Technology and everyday media are full of stories about how artificial intelligence (AI) will change our lives and is already doing so today. Andrew Ng, chief scientist at Baidu Research and a leading deep learning researcher, was recently quoted as saying: “AI is the new electricity ... Just as 100 years ago electricity transformed industry after industry, AI will now do the same.” 1

AI and deep learning algorithms have already found their way into our daily lives: digital assistants on our smartphones that recognize human speech, news feeds that select stories according to our personal interests, and the automatic recognition and tagging of our friends in Facebook images.2

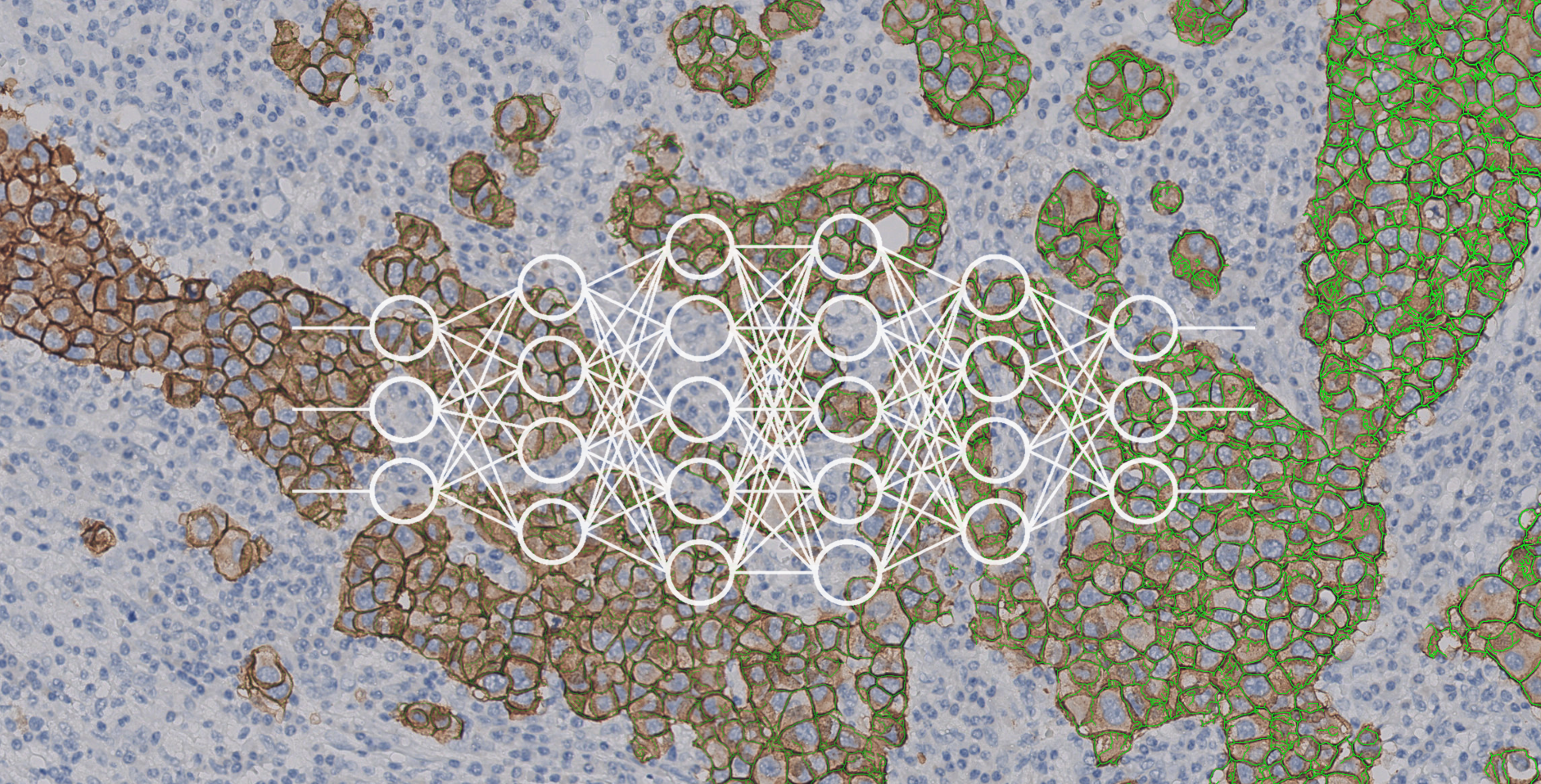

While almost every industry sees potential in AI, healthcare and life sciences are, apart from the tech industry itself, among the main industries adopting these technologies today, for applications such as drug development or medical image analysis, e.g. on digital whole slide images (WSI) scanned from histological sections.3 In recent months we have seen a number of promising results using AI for tasks such as cancer detection on WSI, both in scientific publications (overview in Part 3) and in commercial efforts by digital pathology vendors.

Invasive ductal carcinoma (IDC) segmentation using deep learning (probability heatmap), Source: Andrew Janowczyk

The technology enabling the latest AI breakthroughs is called Deep Learning. The basic idea behind deep learning is to let machines learn tasks such as speech or image recognition by themselves from a set of training data, without a human programmer “teaching them” the features to look for. This is achieved with deep neural networks, a way of learning inspired by the human brain. Having spent the last two years with a toddler, witnessing the amazing development of a new human brain that learns to speak, walk and name all the animals in the local zoo at incredible speed, I find it hard to believe that any algorithm running on a machine could ever reproduce this. So let’s take a closer look at the state of the art and at what today’s algorithms are capable of.

In pathology, deep learning, given the proper training, could help computers look at tissue and recognize patterns such as a tumor more like a pathologist does. In this way, deep learning could help overcome existing limitations of digital image analysis, such as the dependency on human-designed features. So could deep learning help image analysis become the killer app for digital pathology? Of course we cannot give a definite answer to this question today. But with all the opportunities for pathologists and patients in mind, it is a good time to take a closer look, without digging into the math, at the technical concepts behind deep learning and the reasons for its recent breakthroughs (Part 1), its application to image recognition (Part 2), and its possible applications in digital pathology along with how the technology might impact pathology in general (Part 3).

Part 1: AI, Machine Learning and Deep Learning in a nutshell

Artificial Intelligence, Machine Learning and Deep Learning are often mentioned in the same context, so let’s start with some definitions. AI is “the science and engineering of making intelligent machines, especially intelligent computer programs.”4 Machine Learning is a field within AI: “the science of getting computers to act without being explicitly programmed.”5 Deep Learning is a specific machine learning technology that uses layered, so-called “deep” neural networks and vast quantities of data to discover the intricate structure in large data sets. Once trained on a training data set, a network can be applied to new data of the same type. These methods have dramatically improved the state of the art in speech recognition, visual object recognition, object detection and many other domains such as drug discovery and genomics.6 7
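To make “without being explicitly programmed” concrete, here is a minimal sketch in Python. It assumes a toy task (the logical AND of two binary inputs) and uses the classic perceptron learning rule; a perceptron is a single artificial neuron and far simpler than a deep network, and the function names are illustrative only. The program is never told the AND rule: it only sees labeled examples and adjusts its weights whenever it makes a mistake.

```python
# Minimal sketch: a perceptron learns the logical AND function from labeled
# examples instead of being programmed with the rule (toy example, illustrative names).

def train_perceptron(samples, labels, epochs=20, lr=0.1):
    """Learn weights and a bias from (input, label) pairs with the perceptron rule."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            # Predict 1 if the weighted sum plus bias is positive, else 0.
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            prediction = 1 if activation > 0 else 0
            # Adjust the parameters only when the prediction is wrong.
            error = y - prediction
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias


def predict(weights, bias, x):
    """Apply the learned weights and bias to a new input."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


samples = [(0, 0), (0, 1), (1, 0), (1, 1)]
labels = [0, 0, 0, 1]  # AND: only (1, 1) belongs to the positive class
w, b = train_perceptron(samples, labels)
print([predict(w, b, x) for x in samples])  # [0, 0, 0, 1]
```

Because AND is linearly separable, the perceptron rule is guaranteed to find a separating set of weights; harder patterns need more neurons and more layers.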

Feature Design

One of the big challenges with traditional machine learning models based on handcrafted features is a process called feature design. The human programmer needs to tell the computer what kind of things it should look for to make a decision. This places a huge burden on the programmer, and the algorithm's effectiveness relies heavily on how insightful the programmer is. For complex problems such as diagnosing cancer on histology sections this is a major challenge that essentially requires the knowledge of experienced pathologists. Deep learning is a way to overcome the need for manual feature design: given a proper configuration by the programmer and a proper set of training data, the models learn the right features by themselves. Deep learning algorithms can even find features that no human would have devised. In the first layers, the learned features often resemble attributes humans have designed in the past; in deeper layers, the features become so abstract that no human could plausibly have come up with them. This makes deep learning an extremely powerful tool for all kinds of applications that involve pattern recognition.
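The burden of feature design can be illustrated with a deliberately simplified, hypothetical Python example. Image patches are modeled as small grayscale grids, and the two features (mean intensity and fraction of dark pixels), the thresholds and the notion of a “suspicious” patch are all invented for illustration; they are not a real histopathology criterion. Every one of these choices has to be made by the programmer.

```python
# Hypothetical illustration of traditional feature design: the programmer decides
# which properties of an image patch matter and how to combine them.
# Patches are small grayscale grids with values from 0 (dark) to 255 (bright).

def handcrafted_features(patch):
    """Return two features chosen by hand (illustrative only)."""
    pixels = [p for row in patch for p in row]
    mean_intensity = sum(pixels) / len(pixels)
    # Fraction of "dark" pixels, using a threshold the programmer picked.
    dark_fraction = sum(1 for p in pixels if p < 100) / len(pixels)
    return mean_intensity, dark_fraction


def handcrafted_rule(patch):
    """Flag a patch as 'suspicious' using thresholds chosen by the programmer."""
    mean_intensity, dark_fraction = handcrafted_features(patch)
    return dark_fraction > 0.5 and mean_intensity < 120


dense_patch = [[40, 60, 200], [50, 30, 70], [90, 210, 45]]
sparse_patch = [[220, 230, 200], [210, 80, 240], [250, 235, 225]]
print(handcrafted_rule(dense_patch))   # True
print(handcrafted_rule(sparse_patch))  # False
```

In a deep learning approach, a function like `handcrafted_features` and the fixed thresholds would be replaced by network layers whose parameters are learned from labeled training patches.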

How does it work (apart from all the math)

One application of neural networks is the classification of objects, especially the recognition of complex patterns. A classifier takes an input and computes a confidence score that indicates whether an object belongs to a certain category, e.g. whether an image contains a cat. A neural network can be seen as a combination of classifiers (neurons) arranged in a layered web: an input layer, an output layer and multiple hidden layers in between. Each neuron combines the outputs of the activated neurons in the previous layer using certain weights and a bias, and the output layer in turn produces a confidence score, e.g. that an object belongs to a certain category. This process is called forward propagation. The accuracy of the network’s predictions is improved by training. During training, the weights and biases are adjusted so that the predicted output gets as close as possible to the actual (labeled) output. The error at the output is passed backwards through the network, layer by layer, to determine how each weight and bias should change; this process is called backward propagation (backpropagation).
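The forward and backward propagation steps can be sketched in plain Python for a tiny network with two inputs, two hidden neurons and one output neuron. This is a minimal illustration under stated assumptions: the sigmoid activation, the squared-error loss, per-example gradient descent and the toy logical AND task are choices made here for simplicity, and `TinyNetwork` is a hypothetical name. Real deep learning frameworks compute these gradients automatically for networks with millions of weights.

```python
import math
import random


def sigmoid(z):
    """Squash a weighted sum into a confidence score between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


class TinyNetwork:
    """Hypothetical 2-2-1 network: 2 inputs, 2 hidden neurons, 1 output neuron."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # w_hidden[j][i] is the weight from input i to hidden neuron j.
        self.w_hidden = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(2)]
        self.b_hidden = [0.0, 0.0]
        self.w_out = [rng.uniform(-1, 1) for _ in range(2)]
        self.b_out = 0.0

    def forward(self, x):
        """Forward propagation: weighted sums plus biases, passed through sigmoid."""
        hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x)) + b)
                  for ws, b in zip(self.w_hidden, self.b_hidden)]
        output = sigmoid(sum(w * h for w, h in zip(self.w_out, hidden)) + self.b_out)
        return hidden, output

    def train_step(self, x, target, lr):
        """Backward propagation for one example, loss = 0.5 * (output - target)^2."""
        hidden, output = self.forward(x)
        # Error signal at the output neuron (gradient w.r.t. its weighted sum).
        delta_out = (output - target) * output * (1 - output)
        # Pass the error back to each hidden neuron, using the current output weights.
        delta_hidden = [delta_out * self.w_out[j] * hidden[j] * (1 - hidden[j])
                        for j in range(2)]
        # Gradient-descent updates: output layer, then hidden layer.
        for j in range(2):
            self.w_out[j] -= lr * delta_out * hidden[j]
        self.b_out -= lr * delta_out
        for j in range(2):
            for i in range(2):
                self.w_hidden[j][i] -= lr * delta_hidden[j] * x[i]
            self.b_hidden[j] -= lr * delta_hidden[j]


def mean_squared_error(net, data):
    return sum((net.forward(x)[1] - y) ** 2 for x, y in data) / len(data)


data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]  # toy task: logical AND
net = TinyNetwork(seed=42)
loss_before = mean_squared_error(net, data)
for _ in range(5000):
    for x, y in data:
        net.train_step(x, y, lr=0.5)
loss_after = mean_squared_error(net, data)
print(f"loss before: {loss_before:.4f}, after: {loss_after:.4f}")
```

Training is simply the repetition of forward propagation, error measurement and backpropagation; after many passes over the examples, the loss should be much lower than before training.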

Neural networks are called “deep” when they consist of many hidden layers instead of just one or two. This enables them to recognize complex patterns such as a human face in an image: the first layer detects simple patterns like edges, the next layers combine them into larger structures like a nose, and later layers recognize more complex patterns such as a whole face. These deep networks were inspired by the layered architecture of the human brain. Because of their complexity, deep networks need a lot of computing power, typically provided by powerful Graphics Processing Units (GPUs).
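The idea that early layers respond to edges can be illustrated with the operation at the heart of convolutional networks, a common architecture for image recognition: sliding a small 3×3 kernel over an image. In this sketch the kernel values are set by hand to respond to vertical dark-to-bright edges; in a trained network such filters are learned from data, and it is an assumption of this example that a first-layer filter would look like this one. The `relu` step mimics a common activation function that keeps only positive responses.

```python
# Sketch of a single "first layer" filter: a hand-set kernel that responds to
# vertical dark-to-bright edges (real networks learn their kernel values).

def convolve2d(image, kernel):
    """Valid-mode 2D cross-correlation, the operation convolutional layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(kernel[a][b] * image[i + a][j + b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(feature_map):
    """Keep positive responses, set negative ones to zero."""
    return [[max(0, v) for v in row] for row in feature_map]


vertical_edge_kernel = [[-1, 0, 1],
                        [-1, 0, 1],
                        [-1, 0, 1]]

# 5x5 image: dark (0) in the two left columns, bright (9) in the three right columns.
image = [[0, 0, 9, 9, 9] for _ in range(5)]

edges = relu(convolve2d(image, vertical_edge_kernel))
for row in edges:
    print(row)  # [27, 27, 0]
```

The two windows that straddle the dark/bright boundary respond strongly (27), while the window lying entirely in the bright region gives 0. A deeper layer would apply the same kind of operation to these feature maps, combining edges into larger structures.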

Why is it all happening right now?

- Availability of large amounts of labeled data, such as the ImageNet database

- Massive computing power provided by large GPUs

Deep Learning Timeline

Learning Resources

If you want to learn more about deep learning, machine learning and neural networks, a variety of resources are freely available online. Here are some recommendations that are easy to understand, even if you don’t have a computer science background:

- A Very Short History Of Artificial Intelligence (AI): A more comprehensive but still compact and entertaining overview of the field of AI was recently published by Forbes.

- Deep Learning Simplified: An easy-to-understand video series about major concepts that does not involve any code or math

- Yann LeCun, Yoshua Bengio & Geoffrey Hinton: Deep learning, Nature, Volume 521, Number 7553, 436–444, 2015.


In part 2 of our series we will take a closer look at deep learning methods for image recognition and the current state of the art. Part 3 will focus on how these methods can be applied to digital pathology image analysis and how this might impact pathology in general.

1 The AI Revolution: Why You Need to Learn About Deep Learning

2 An exclusive inside look at how artificial intelligence and machine learning work at Apple

3 Accelerating AI with GPUs: A New Computing Model

4 John McCarthy, What Is Artificial Intelligence? (2007).

5 Andrew Ng, Machine Learning, Course on Coursera

6 Yann LeCun, Yoshua Bengio & Geoffrey Hinton, Deep learning, Nature 521:436–444 (2015).

7 The AI Revolution: Why You Need to Learn About Deep Learning, Forbes, 2016.

8 Geoffrey Hinton, Neural Networks for Language and Understanding, Creative Destruction Lab Machine Learning and Market for Intelligence conference (Toronto, 2015).